Finally, we provide an example of a complete language model: a deep sequence model backbone (with repeating Mamba blocks) + language model head.
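The backbone-plus-head layout can be sketched in plain Python. This is only a structural illustration under stated assumptions: `ToyBlock` and `ToyLM` are hypothetical names, the mixer inside each block is a stand-in (a causal running mean), not a real Mamba block, and tying the head weights to the embedding table is an assumption made here for brevity.

```python
import random


class ToyBlock:
    """Residual block with a stand-in mixer (causal running mean), NOT a real Mamba block."""

    def __call__(self, hidden):
        out = []
        running = [0.0] * len(hidden[0])
        for t, h in enumerate(hidden, start=1):
            # Causal mixing: position t only sees positions 1..t.
            running = [r + v for r, v in zip(running, h)]
            mixed = [r / t for r in running]
            # Residual connection around the mixer.
            out.append([v + m for v, m in zip(h, mixed)])
        return out


class ToyLM:
    """Embedding -> stack of repeated blocks -> language model head."""

    def __init__(self, vocab_size, d_model, n_layers, seed=0):
        rng = random.Random(seed)
        self.embedding = [
            [rng.gauss(0.0, 0.02) for _ in range(d_model)] for _ in range(vocab_size)
        ]
        self.blocks = [ToyBlock() for _ in range(n_layers)]

    def __call__(self, token_ids):
        hidden = [self.embedding[i] for i in token_ids]
        for block in self.blocks:
            hidden = block(hidden)
        # LM head: one logit per vocabulary entry at every position.
        # Weights are tied to the embedding table (an assumption of this sketch).
        return [
            [sum(h_i * e_i for h_i, e_i in zip(h, e)) for e in self.embedding]
            for h in hidden
        ]


logits = ToyLM(vocab_size=10, d_model=4, n_layers=2)([1, 2, 3])
print(len(logits), len(logits[0]))  # sequence length, vocabulary size
```

Because every block is causal, the logits for a prefix do not change when later tokens are appended, which is the property a language model head needs for next-token prediction.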


If passed along, the model uses the previous state in all of the blocks (which will give the output for the


This model inherits from PreTrainedModel. Check the superclass documentation for the generic methods the


The efficacy of self-attention is attributed to its ability to route information densely within a context window, allowing it to model complex data.


Foundation models, now powering most of the exciting applications in deep learning, are almost universally based on the Transformer architecture and its core attention module. Many subquadratic-time architectures such as linear attention, gated convolution and recurrent models, and structured state space models (SSMs) have been developed to address Transformers' computational inefficiency on long sequences, but they have not performed as well as attention on important modalities such as language. We identify that a key weakness of such models is their inability to perform content-based reasoning, and make several improvements. First, simply letting the SSM parameters be functions of the input addresses their weakness with discrete modalities, allowing the model to selectively propagate or forget information along the sequence length dimension depending on the current token.
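The idea of making the SSM parameters functions of the input can be illustrated with a toy single-channel recurrence. This is a minimal sketch, not the paper's implementation: the function name `selective_scan` and the parameters `w_delta`, `b_delta`, `w_B` and `w_C` are hypothetical, the state matrix is assumed diagonal, and the discretization used (decay `exp(delta * A)`, input scaled by `delta * B`) is a simplification chosen for readability.

```python
import math


def softplus(z):
    # Numerically stable softplus; keeps the step size positive.
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def selective_scan(xs, A, w_delta, b_delta, w_B, w_C):
    """Toy selective SSM over a scalar input sequence.

    xs: list of floats (one input channel).
    A: diagonal state matrix as a list of negative floats.
    w_delta, b_delta, w_B, w_C: hypothetical projection weights that make
    the step size, B and C depend on the current input.
    """
    h = [0.0] * len(A)
    ys = []
    for x in xs:
        delta = softplus(w_delta * x + b_delta)  # input-dependent step size
        B = [w * x for w in w_B]                 # input-dependent input matrix
        C = [w * x for w in w_C]                 # input-dependent output matrix
        # Large delta: the state is overwritten by the current token.
        # Small delta: the state is carried forward almost unchanged.
        h = [
            math.exp(delta * a) * h_n + delta * b * x
            for a, h_n, b in zip(A, h, B)
        ]
        ys.append(sum(c * h_n for c, h_n in zip(C, h)))
    return ys


ys = selective_scan(
    xs=[1.0, 0.5, -0.3, 2.0],
    A=[-1.0, -0.5],
    w_delta=1.0,
    b_delta=0.0,
    w_B=[1.0, 0.5],
    w_C=[0.3, -0.2],
)
print(ys)
```

The cost is linear in sequence length, since each step touches only the fixed-size state `h`. Setting `w_delta`, `w_B` and `w_C` so that they no longer depend on `x` would turn this back into a time-invariant recurrence.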


State-space models (SSMs) have recently demonstrated competitive performance to transformers at large-scale language modeling benchmarks while achieving linear time and memory complexity as a function of sequence length. Mamba, a recently released SSM model, shows impressive performance in both language modeling and long sequence processing tasks. Simultaneously, mixture-of-expert (MoE) models have shown remarkable performance while significantly reducing the compute and latency costs of inference at the expense of a larger memory footprint. In this paper, we present BlackMamba, a novel architecture that combines the Mamba SSM with MoE to obtain the benefits of both.

Mamba stacks mixer layers, which are the equivalent of attention layers. The core logic of Mamba is held in the MambaMixer class.


One explanation is that many sequence models cannot effectively ignore irrelevant context when necessary; an intuitive example is global convolutions (and general LTI models).
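A small toy comparison may make this concrete. The sketch below is an illustration only: `lti_conv`, `selective_recurrence` and the convention that negative values mark irrelevant tokens are all assumptions introduced here, and the hard gate is a deliberately crude stand-in for a learned, input-dependent one.

```python
def lti_conv(xs, kernel):
    # Causal convolution with a fixed kernel: y_t = sum_k kernel[k] * x_{t-k}.
    # The kernel is the same for every input, so every token inside the
    # kernel's reach contributes to the output whether it matters or not.
    return [
        sum(kernel[k] * xs[t - k] for k in range(min(t + 1, len(kernel))))
        for t in range(len(xs))
    ]


def selective_recurrence(xs, is_relevant):
    # Input-dependent gate: keep the previous state on irrelevant tokens,
    # overwrite it on relevant ones.
    h = 0.0
    ys = []
    for x in xs:
        if is_relevant(x):
            h = x
        ys.append(h)
    return ys


def is_relevant(x):
    # Hypothetical convention for this toy: negative values are filler.
    return x >= 0


kernel = [0.5, 0.3, 0.2]
seq_a = [3.0, -1.0, -1.0]  # one relevant token, then filler
seq_b = [3.0, -7.0, -7.0]  # same relevant token, different filler

print(lti_conv(seq_a, kernel)[-1], lti_conv(seq_b, kernel)[-1])
print(selective_recurrence(seq_a, is_relevant)[-1],
      selective_recurrence(seq_b, is_relevant)[-1])
```

The final output of the fixed-kernel convolution shifts with the filler values, while the gated recurrence returns the relevant token in both cases, because only the latter can decide per token what to keep.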


Haley Joel Osment Then & Now!

Haley Joel Osment Then & Now! Mara Wilson Then & Now!

Mara Wilson Then & Now! Lark Voorhies Then & Now!

Lark Voorhies Then & Now! David Faustino Then & Now!

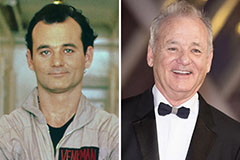

David Faustino Then & Now! Bill Murray Then & Now!

Bill Murray Then & Now!